Whisper

Harness large-scale weak supervision for precise, multilingual speech recognition and translation with Whisper.

Whisper is a general-purpose speech recognition model. Trained on a large dataset of diverse audio, it is a multitask model that performs multilingual speech recognition, speech translation, and language identification. It uses a Transformer sequence-to-sequence architecture in which these tasks are jointly represented as a sequence of tokens for the decoder to predict, so a single model can replace many stages of a traditional speech-processing pipeline.

The codebase is expected to be compatible with Python 3.8-3.11 and recent PyTorch versions. Install or update to the latest release with `pip install -U openai-whisper`; the `ffmpeg` command-line tool must also be installed on the system. Whisper comes in several model sizes that trade speed against accuracy. The turbo model is an optimized version of large-v3 that transcribes faster with minimal loss of accuracy.
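To make the speed/accuracy trade-off concrete, here is a minimal sketch of choosing a model size from the GPU memory available. The `pick_model` helper and its "largest model that fits" policy are hypothetical, and the VRAM figures are approximate values recalled from the project's model table; treat them as assumptions and check the current README before relying on them.

```python
# Approximate VRAM needs in GB, ordered from smallest to largest requirement.
# ASSUMPTION: rough figures recalled from the project's model table.
APPROX_VRAM_GB = [
    ("tiny", 1),
    ("base", 1),
    ("small", 2),
    ("medium", 5),
    ("turbo", 6),
    ("large", 10),
]


def pick_model(available_vram_gb: float) -> str:
    """Hypothetical helper: return the most demanding model that still fits."""
    candidates = [name for name, need in APPROX_VRAM_GB if need <= available_vram_gb]
    if not candidates:
        raise ValueError("not enough memory for any model size")
    # The list is sorted by requirement, so the last candidate is the largest fit.
    return candidates[-1]
```

With these figures, `pick_model(8)` selects turbo and `pick_model(4)` selects small. A real deployment would also weigh latency and whether translation is needed, which this sketch ignores.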

From the command line, `whisper audio.flac --model turbo` transcribes the speech in an audio file, and this setting works well for English. The turbo model is not trained for translation, so to translate non-English speech into English, use a multilingual model such as medium or large and pass `--task translate`. Whisper can also be called from Python, giving scripts programmatic access to transcription.
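A minimal sketch of the Python route, assuming the `openai-whisper` package and `ffmpeg` are installed. `whisper.load_model` and `model.transcribe` are the library's calls; the `choose_model` and `run_whisper` wrappers, and the choice of medium as the translation fallback, are hypothetical and only illustrate the turbo-versus-multilingual rule described above.

```python
from typing import Optional


def choose_model(task: str) -> str:
    """Hypothetical helper: map a task to a suitable model name."""
    if task == "transcribe":
        return "turbo"
    if task == "translate":
        # turbo is not trained for translation, so use a multilingual model.
        return "medium"
    raise ValueError(f"unknown task: {task!r}")


def run_whisper(audio_path: str, task: str = "transcribe",
                language: Optional[str] = None) -> str:
    """Transcribe (or translate into English) one audio file, return the text."""
    # Imported lazily so choose_model can be used without the package installed.
    import whisper

    model = whisper.load_model(choose_model(task))
    # language=None lets Whisper detect the spoken language itself.
    result = model.transcribe(audio_path, task=task, language=language)
    return result["text"]
```

Calling `run_whisper("audio.flac")` would mirror the command-line example, while `run_whisper("audio.flac", task="translate")` would load the multilingual model instead. The first call downloads the model weights, so it can take a while.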

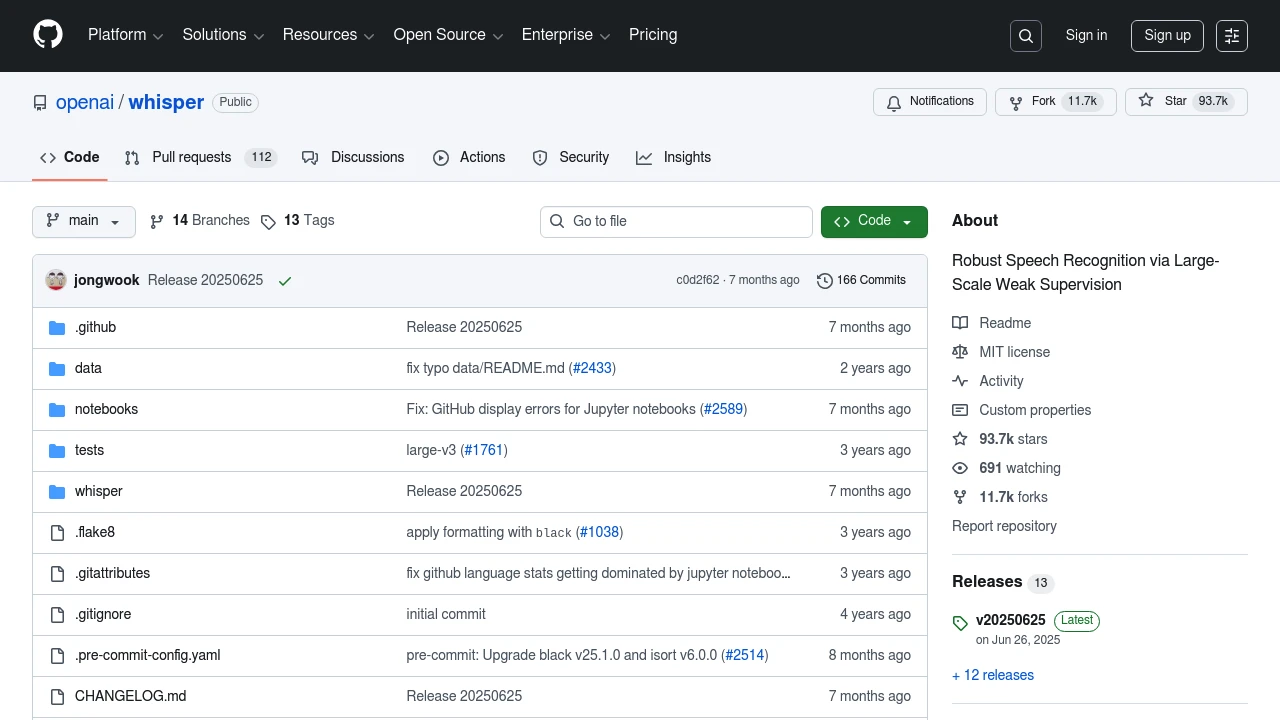

Whisper's performance varies widely by language. The project publishes per-language breakdowns of word error rate (WER) or character error rate (CER) for the large-v3 and large-v2 models. The code and model weights are released under the MIT License, which permits community contributions and integrations.