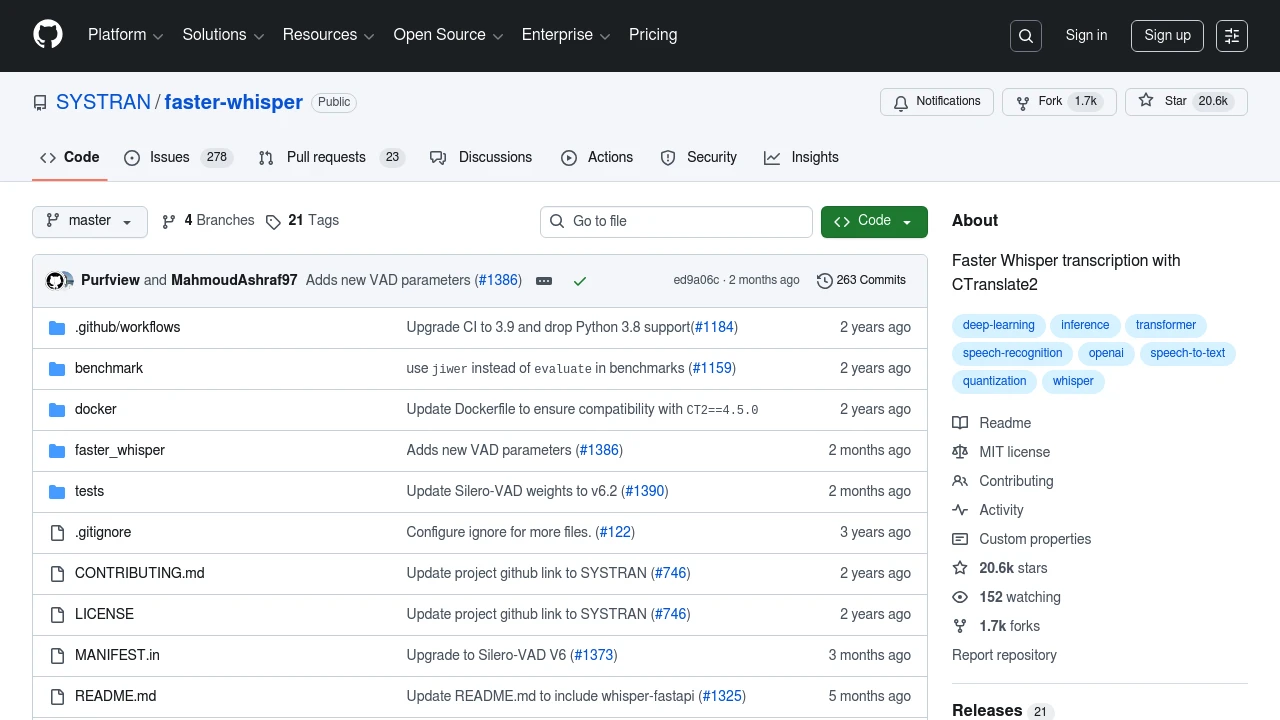

Faster Whisper

Experience rapid, efficient transcription using CTranslate2 for faster results and reduced memory usage.

Faster Whisper is a reimplementation of OpenAI's Whisper model using CTranslate2, a fast inference engine for Transformer models. It runs up to 4x faster than the original openai/whisper implementation at the same accuracy while using less memory. Support for 8-bit quantization on both CPU and GPU improves efficiency further.
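A minimal sketch of choosing the precision when loading a model, assuming the `faster_whisper` package is installed. `WhisperModel` and its `device`/`compute_type` arguments come from the library. The helper `choose_compute_type` and its device-to-precision mapping are hypothetical choices for illustration: FP16 or INT8 weights with FP16 activations on GPU, and INT8 or FP32 on CPU.

```python
from typing import Literal

Device = Literal["cpu", "cuda"]


def choose_compute_type(device: Device, quantize: bool) -> str:
    """Hypothetical helper: map a device and a quantization flag to a
    CTranslate2 compute type string accepted by WhisperModel."""
    if device == "cuda":
        # GPU: 8-bit weights with FP16 computation, or plain FP16.
        return "int8_float16" if quantize else "float16"
    if device == "cpu":
        # CPU: 8-bit integer quantization, or full FP32.
        return "int8" if quantize else "float32"
    raise ValueError(f"unsupported device: {device!r}")


def load_model(model_size: str = "small", device: Device = "cpu", quantize: bool = True):
    """Load a Whisper model with faster-whisper at the chosen precision.

    The import is deferred so the helper above can be used and tested
    without faster-whisper installed.
    """
    from faster_whisper import WhisperModel  # installed via `pip install faster-whisper`

    return WhisperModel(
        model_size,
        device=device,
        compute_type=choose_compute_type(device, quantize),
    )
```

For example, `load_model("small", device="cuda", quantize=True)` would load the model with `compute_type="int8_float16"`; the model weights are downloaded on first use.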

Key benefits include:

- Speed: Transcribe audio significantly faster than with the original openai/whisper implementation.

- Efficiency: Lower memory consumption without sacrificing accuracy.

- Flexibility: Supports several compute precisions (for example FP16 and INT8) and batch sizes, so performance can be tuned to the available hardware.
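The batch-size option can be sketched as follows, assuming a recent faster-whisper release that provides `BatchedInferencePipeline` (older releases only offer sequential `WhisperModel.transcribe`). The helper names `transcribe_batched` and `format_segments` are hypothetical, and `audio_path` stands in for any audio file. `transcribe` returns a lazy generator of segments, so decoding only happens while the segments are iterated.

```python
from typing import Iterable, List, Protocol


class Segment(Protocol):
    """Structural type for the segment fields used below."""
    start: float
    end: float
    text: str


def format_segments(segments: Iterable[Segment]) -> List[str]:
    """Render segments as '[start -> end] text' lines, times in seconds."""
    return [f"[{s.start:.2f}s -> {s.end:.2f}s] {s.text.strip()}" for s in segments]


def transcribe_batched(audio_path: str, model_size: str = "small",
                       device: str = "cuda", compute_type: str = "float16",
                       batch_size: int = 16) -> List[str]:
    """Transcribe one file with batched inference and return formatted lines."""
    # Deferred import: only needed when actually transcribing.
    from faster_whisper import BatchedInferencePipeline, WhisperModel

    model = WhisperModel(model_size, device=device, compute_type=compute_type)
    pipeline = BatchedInferencePipeline(model=model)
    # `segments` is a generator; audio is decoded as format_segments consumes it.
    segments, info = pipeline.transcribe(audio_path, batch_size=batch_size)
    print(f"Detected language {info.language!r} (p={info.language_probability:.2f})")
    return format_segments(segments)
```

Larger `batch_size` values generally trade more GPU memory for throughput; the value that works best depends on the hardware and model size.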

Faster Whisper requires Python 3.9 or later and does not need FFmpeg installed on the system, which simplifies setup: audio is decoded with the PyAV library, which bundles the FFmpeg libraries. GPU execution additionally requires NVIDIA's cuBLAS and cuDNN libraries. The package installs with pip (`pip install faster-whisper`), and the project documents how to convert Whisper models to the CTranslate2 format and how to integrate the library with other projects. Whether for real-time transcription or batch processing, Faster Whisper offers a robust solution for developers and researchers alike.
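A minimal setup-check and model-conversion sketch, assuming faster-whisper was installed with pip and, for conversion only, that `transformers[torch]` is also installed. `TransformersConverter`, its `copy_files` argument, and `convert(..., quantization=...)` belong to CTranslate2's Python API (the same converter behind the `ct2-transformers-converter` command). The helper functions, the default model ID, and the output directory name are hypothetical choices for illustration.

```python
import importlib.util
import sys


def setup_report() -> dict:
    """Hypothetical helper: report the prerequisites described above."""
    return {
        # faster-whisper targets Python 3.9 or newer.
        "python_ok": sys.version_info >= (3, 9),
        "faster_whisper_installed": importlib.util.find_spec("faster_whisper") is not None,
        # Audio is decoded through PyAV ("av"), which bundles FFmpeg's libraries,
        # so no system-wide ffmpeg executable is checked for here.
        "pyav_installed": importlib.util.find_spec("av") is not None,
    }


def convert_whisper_model(model_id: str = "openai/whisper-large-v3",
                          output_dir: str = "whisper-large-v3-ct2",
                          quantization: str = "float16") -> str:
    """Convert a Hugging Face Whisper checkpoint to the CTranslate2 format.

    Returns the output directory, which can then be passed to
    faster_whisper.WhisperModel in place of a model size name.
    """
    # Deferred import: conversion also needs `transformers` and `torch`.
    from ctranslate2.converters import TransformersConverter

    converter = TransformersConverter(
        model_id,
        # Copy tokenizer and preprocessor settings next to the converted weights.
        copy_files=["tokenizer.json", "preprocessor_config.json"],
    )
    return converter.convert(output_dir, quantization=quantization)
```

Converting once and loading the resulting directory avoids repeating the conversion on every run; the chosen `quantization` fixes the stored weight precision, while `compute_type` at load time still controls the precision used for inference.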