TensorRT-LLM

Speed up LLM inference with a Python API and optimizations for NVIDIA GPUs.

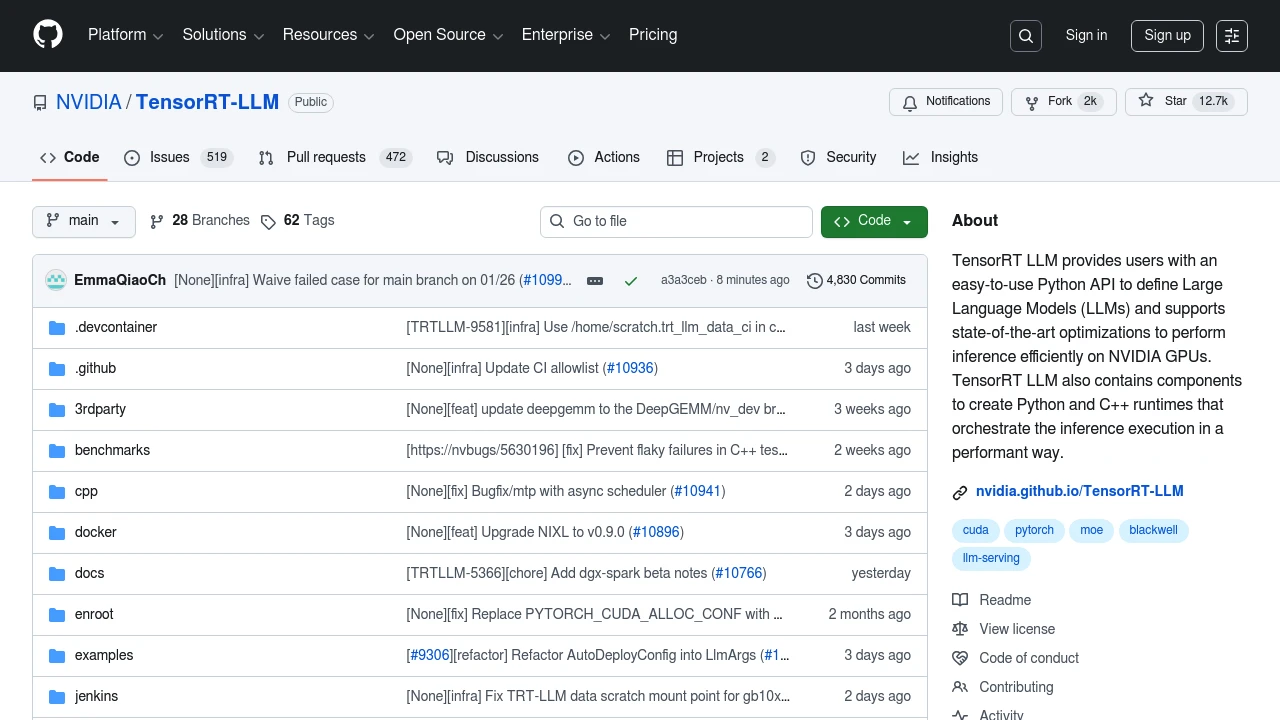

TensorRT LLM provides a Python API for defining Large Language Models (LLMs) and applies optimizations for NVIDIA GPUs, including custom attention kernels, in-flight batching, and quantization. It includes both Python and C++ runtimes for orchestrating inference execution.
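To give a feel for why in-flight batching helps, here is a minimal, library-independent sketch. It does not use the TensorRT LLM API; the function names and the simplified cost model (one decode step per generated token, a fixed number of batch slots) are assumptions made purely for illustration. It compares static batching, where a batch runs until its longest request finishes, with in-flight batching, where a finished request's slot is refilled from the queue right away.

```python
from collections import deque


def static_batching_steps(lengths, batch_size):
    """Decode steps when each batch runs until its longest request finishes.

    `lengths` holds the number of tokens each request generates (hypothetical input).
    """
    total = 0
    for start in range(0, len(lengths), batch_size):
        total += max(lengths[start:start + batch_size])
    return total


def inflight_batching_steps(lengths, batch_size):
    """Decode steps when a freed slot is refilled before the next step."""
    queue = deque(lengths)
    active = []  # remaining tokens for each request currently in the batch
    steps = 0
    while queue or active:
        # Admit waiting requests into any free slots.
        while queue and len(active) < batch_size:
            active.append(queue.popleft())
        # One decode step produces one token for every active request.
        steps += 1
        active = [remaining - 1 for remaining in active if remaining > 1]
    return steps


if __name__ == "__main__":
    request_lengths = [6, 1, 1, 6]
    print("static:", static_batching_steps(request_lengths, batch_size=2))
    print("in-flight:", inflight_batching_steps(request_lengths, batch_size=2))
```

In this toy workload, short requests no longer wait for long ones to finish, so the in-flight schedule needs fewer total steps. A real scheduler also has to account for prefill cost and memory limits, which this sketch ignores.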

TensorRT LLM has built-in support for several parallelism strategies and scales from single-GPU setups to multi-node deployments. Its modular, PyTorch-native architecture makes it straightforward to adapt and extend, and it integrates with the broader NVIDIA inference ecosystem. Popular models are pre-defined and can be customized to meet specific needs.
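As a conceptual illustration of one such parallelism strategy, the sketch below shows column-wise tensor parallelism in plain Python. It is not TensorRT LLM code: the helper names are hypothetical, lists stand in for GPU tensors, and each "shard" plays the role of one device. The idea is that a weight matrix is split by columns, each device multiplies the same input by its own shard, and the partial outputs are concatenated.

```python
def matmul(x, w):
    """Multiply vector x (length n) by matrix w (n rows, m columns)."""
    return [sum(x[i] * w[i][j] for i in range(len(x))) for j in range(len(w[0]))]


def split_columns(w, num_shards):
    """Split w column-wise into equal shards, one per simulated device."""
    cols = len(w[0])
    if cols % num_shards != 0:
        raise ValueError("column count must be divisible by num_shards")
    width = cols // num_shards
    return [[row[s * width:(s + 1) * width] for row in w] for s in range(num_shards)]


def tensor_parallel_matmul(x, w, num_shards):
    """Compute x @ w by combining per-shard partial results."""
    shards = split_columns(w, num_shards)
    # In a real deployment each of these products would run on a different GPU.
    partials = [matmul(x, shard) for shard in shards]
    # Concatenating the partial outputs restores the full result vector.
    return [value for part in partials for value in part]


if __name__ == "__main__":
    x = [1, 2]
    w = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
    print(matmul(x, w))
    print(tensor_parallel_matmul(x, w, num_shards=2))
```

Both calls produce the same vector, which is the property that lets a layer be spread across devices without changing its output.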