SGLang

Deliver low-latency, high-throughput inference for AI models. Supports diverse hardware and model types with extensive community backing.

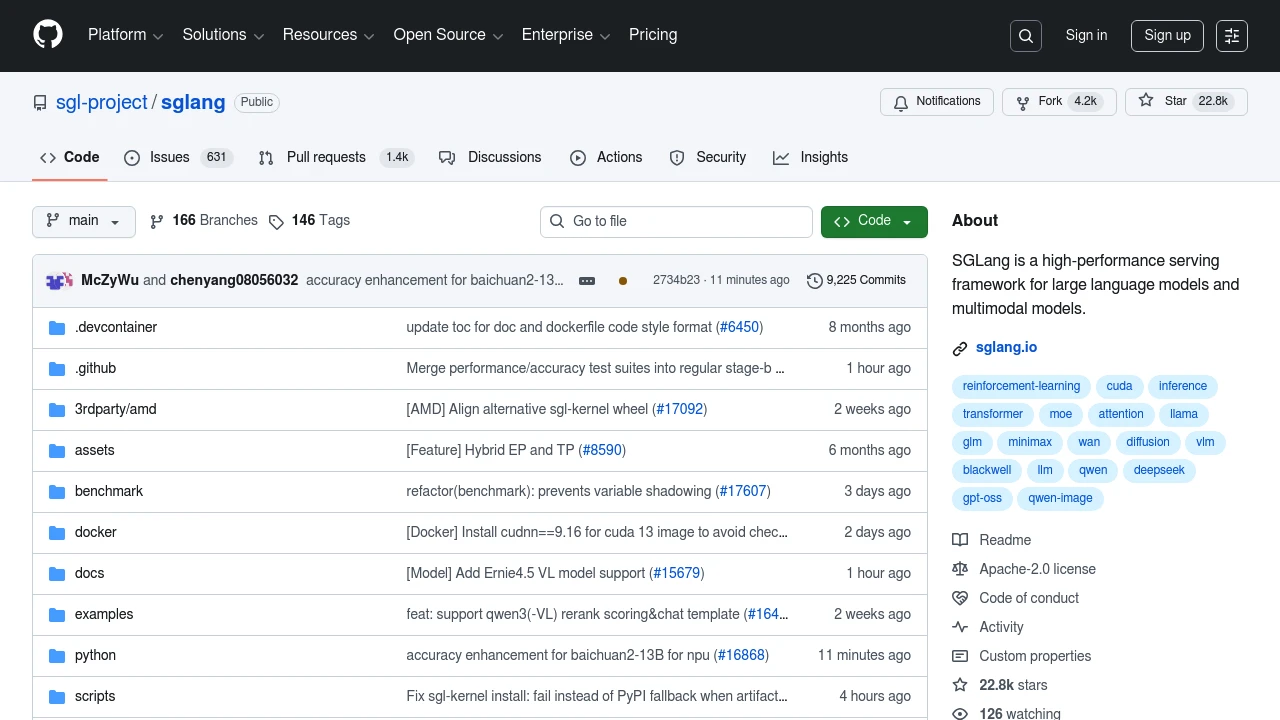

SGLang is a high-performance serving framework for large language models and multimodal models. It is designed for low-latency, high-throughput inference and scales from a single GPU to large distributed clusters. Key features include:

- Fast Runtime: Efficient serving with RadixAttention, a zero-overhead CPU scheduler, and various parallelism techniques.

- Broad Model Support: Compatible with many models, such as Llama and GPT, and easy to extend to new models.

- Extensive Hardware Compatibility: Runs on NVIDIA, AMD, Intel, Google TPUs, and more.

- Active Community: Open-source with widespread industry adoption, powering over 400,000 GPUs globally.

SGLang is trusted by leading enterprises and institutions worldwide, making it the de facto standard for AI model serving.
