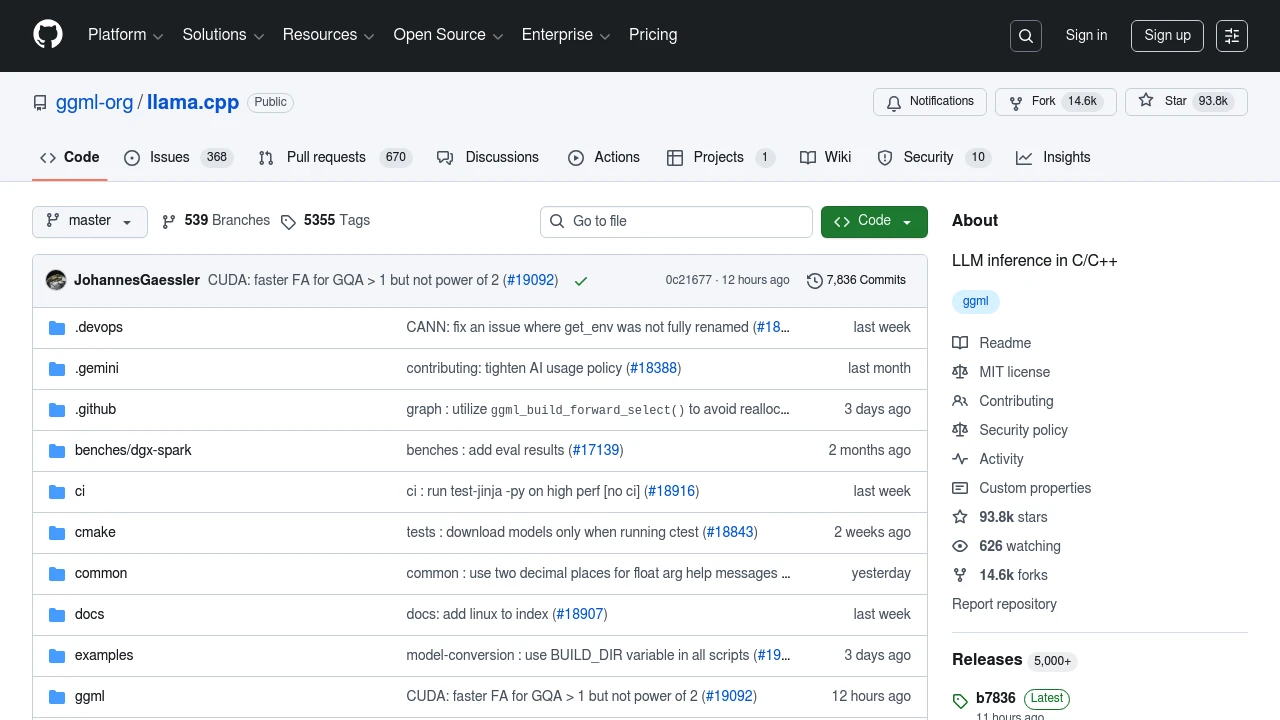

llama.cpp

Achieve high-performance LLM inference with minimal setup using C/C++. Supports diverse hardware and quantization for optimal efficiency.

llama.cpp provides state-of-the-art LLM inference with minimal setup and high performance across a wide range of hardware. It is a plain C/C++ implementation with no external dependencies, which makes it practical for both local and cloud environments.

Key features include:

- Optimized for Apple Silicon via ARM NEON instructions and the Metal framework.

- Support for x86 architectures with AVX, AVX2, AVX512, and AMX.

- RISC-V architecture support with RVV and other extensions.

- Integer quantization from 1.5-bit to 8-bit for faster inference and reduced memory usage.

- Custom CUDA kernels for NVIDIA GPUs, with AMD GPU support via HIP.

- Vulkan and SYCL backends for broader compatibility.

- Hybrid CPU+GPU inference to partially accelerate models that are larger than the available VRAM.
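To illustrate the hybrid CPU+GPU item, here is a minimal sketch of the underlying idea: place as many layers as fit in VRAM on the GPU and leave the rest on the CPU. The function `split_layers`, the uniform layer size, and the example numbers are all hypothetical and chosen for illustration; this is not llama.cpp's actual allocation logic.

```python
def split_layers(n_layers: int, layer_bytes: int, vram_bytes: int,
                 reserve_bytes: int = 0) -> tuple[int, int]:
    """Return (gpu_layers, cpu_layers), assuming every layer has the same size.

    reserve_bytes is VRAM kept free for other buffers (an assumption of this
    sketch; real runtimes also need room for caches and scratch space).
    """
    if layer_bytes <= 0:
        raise ValueError("layer_bytes must be positive")
    usable = max(vram_bytes - reserve_bytes, 0)
    gpu_layers = min(n_layers, usable // layer_bytes)
    return gpu_layers, n_layers - gpu_layers


GIB = 1024 ** 3

# Hypothetical model: 32 layers of 0.5 GiB each (16 GiB total),
# on a GPU with 8 GiB of VRAM, keeping 1 GiB in reserve.
gpu, cpu = split_layers(32, GIB // 2, 8 * GIB, reserve_bytes=1 * GIB)
print(f"GPU layers: {gpu}, CPU layers: {cpu}")  # GPU layers: 14, CPU layers: 18
```

In practice the split is set by the user: llama.cpp's command-line tools accept a GPU layer count (`--n-gpu-layers`), and the remaining layers run on the CPU.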

llama.cpp is a robust platform for running and deploying LLMs. It supports a wide range of models and includes tools for model conversion and quantization. Whether you run models locally or in the cloud, llama.cpp provides the flexibility and performance that modern AI applications need.
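As a rough illustration of why integer quantization reduces memory usage, the sketch below quantizes a block of floating-point weights to 8-bit integers that share a single scale factor. It is a simplified, hypothetical example of block-wise symmetric quantization; the function names are invented here, and llama.cpp's own quantization formats differ in their details.

```python
def quantize_block(values: list[float]) -> tuple[float, list[int]]:
    """Map a block of floats to integers in [-127, 127] plus one shared scale."""
    amax = max((abs(v) for v in values), default=0.0)
    scale = amax / 127.0
    if scale == 0.0:
        # An all-zero (or empty) block needs no scaling.
        return 0.0, [0] * len(values)
    return scale, [round(v / scale) for v in values]


def dequantize_block(scale: float, quants: list[int]) -> list[float]:
    """Recover approximate floats from the integers and the shared scale."""
    return [scale * q for q in quants]


block = [0.5, -1.0, 0.25, 0.0]  # hypothetical weights
scale, quants = quantize_block(block)
restored = dequantize_block(scale, quants)

# Each restored value is within half a quantization step of the original.
max_error = max(abs(a - b) for a, b in zip(block, restored))
print(f"scale={scale:.6f} quants={quants} max_error={max_error:.6f}")
```

Each weight is stored as one small integer instead of a 32-bit float, at the cost of a rounding error bounded by half the scale. Lower bit widths shrink the model further and increase that error.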
