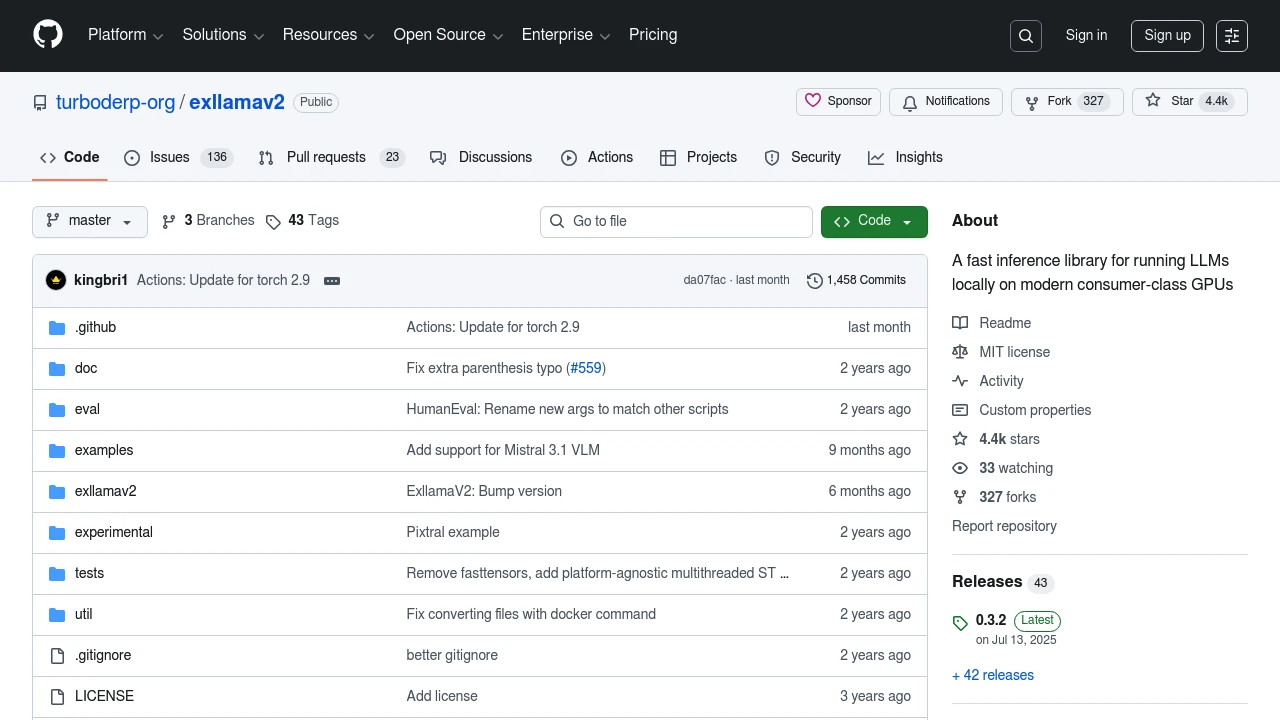

ExLlamaV2

Fast inference library for local LLMs on consumer GPUs. Supports dynamic batching, smart caching, and more.

ExLlamaV2 is an inference library for running large language models (LLMs) locally on modern consumer-class GPUs. It speeds up inference with dynamic batching, smart prompt caching, and key-value cache deduplication. The library supports a variety of models and quantization formats, including the new "EXL2" format, which makes more efficient use of limited GPU memory.
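
To make the idea of prompt caching with key-value cache deduplication more concrete, here is a toy sketch in plain Python. It is not ExLlamaV2 code: the class `ToyPagedCache`, the tiny `PAGE_SIZE`, and the use of SHA-256 are all assumptions chosen for illustration. It only shows the general principle that prompts sharing a prefix can share cached pages instead of storing them twice.

```python
import hashlib

PAGE_SIZE = 4  # tokens per cache page; tiny on purpose, for illustration only


class ToyPagedCache:
    """Toy model of prompt caching with page deduplication (not ExLlamaV2 code)."""

    def __init__(self):
        self.pages = {}  # page hash -> token ids (stand-in for stored keys/values)
        self.hits = 0    # full pages that were reused instead of stored again

    def add_sequence(self, token_ids):
        """Register a prompt and return the hashes of the pages backing it."""
        page_hashes = []
        prefix_hash = b""
        # Only full pages are cached; a trailing partial page is skipped.
        full_len = len(token_ids) - len(token_ids) % PAGE_SIZE
        for start in range(0, full_len, PAGE_SIZE):
            page = tuple(token_ids[start:start + PAGE_SIZE])
            # Chain the previous hash in, so a page only matches when the
            # whole prefix before it is identical as well.
            digest = hashlib.sha256(prefix_hash + repr(page).encode()).digest()
            if digest in self.pages:
                self.hits += 1
            else:
                self.pages[digest] = page
            page_hashes.append(digest)
            prefix_hash = digest
        return page_hashes


cache = ToyPagedCache()
system_prompt = [1, 2, 3, 4, 5, 6, 7, 8]  # shared prefix, two full pages
a = cache.add_sequence(system_prompt + [10, 11, 12, 13])
b = cache.add_sequence(system_prompt + [20, 21, 22, 23])
print(len(cache.pages), cache.hits)  # prints "4 2"
```

Two prompts of three pages each end up occupying four pages rather than six, because the two pages covering the shared prefix are stored once.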

Key Features:

- Paged Attention: Supports paged attention, which manages the key-value cache in pages for more efficient memory use.

- Dynamic Generator: Consolidates multiple inference features into a single API.

- Flexible Installation: Install from source, release, or PyPI with ease.

- Integration Ready: Compatible with FastAPI for web API deployment.
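
As a rough illustration of the dynamic generator mentioned above, the following sketch loads a model and generates a completion. The class and method names follow the project's published examples as best recalled here, so check them against the version you install. The helper `generate_text`, the `model_dir` argument, and the `max_seq_len` value are assumptions made for this example.

```python
def generate_text(model_dir: str, prompt: str, max_new_tokens: int = 200) -> str:
    """Load the model in model_dir and complete prompt with the dynamic generator."""
    # Imported inside the function so this file can be loaded on a machine
    # without exllamav2 or a GPU; actually calling the function needs both.
    from exllamav2 import ExLlamaV2, ExLlamaV2Cache, ExLlamaV2Config, ExLlamaV2Tokenizer
    from exllamav2.generator import ExLlamaV2DynamicGenerator

    config = ExLlamaV2Config(model_dir)
    model = ExLlamaV2(config)

    # Allocate the cache lazily so the model can be split across available GPUs.
    cache = ExLlamaV2Cache(model, max_seq_len=4096, lazy=True)
    model.load_autosplit(cache, progress=True)
    tokenizer = ExLlamaV2Tokenizer(config)

    generator = ExLlamaV2DynamicGenerator(model=model, cache=cache, tokenizer=tokenizer)
    return generator.generate(prompt=prompt, max_new_tokens=max_new_tokens, add_bos=True)
```

A call would look like `generate_text("/path/to/model", "Once upon a time,")`, where the path is a placeholder for a local directory containing a supported model.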

Whether you're developing a chatbot or exploring AI applications, ExLlamaV2 provides the tools you need to leverage the power of LLMs on your own hardware.

